Hyderabad’s organisations handle text at scale: customer feedback, call transcripts, delivery notes, invoices, and civic notices. Turning these messy strings into structured signals is a daily task for analysts and engineers. Regular expressions—often shortened to regex—offer a compact language for describing text patterns so they can be searched, extracted, and validated quickly.

Regex is not a silver bullet, but it excels at repetitive, well-defined parsing jobs. With a few carefully chosen patterns and robust tests, teams can clean inputs, enrich datasets, and automate tedious steps that otherwise clog analysis. This article explains practical regex concepts and Python techniques with examples relevant to the city’s mix of sectors.

Why Regex Matters for Hyderabad’s Data Workflows

Local datasets often blend English with regional languages, mixed punctuation, and idiosyncratic abbreviations. Delivery addresses may contain landmarks instead of postcodes, and SMS logs can mix dates, numbers, and transaction codes in one line. Regex helps tame this variety by matching structure rather than exact words.

When paired with clear documentation and comprehensive tests, regex patterns become a valuable shared resource. For example, colleagues can reuse a tested extractor for invoice numbers, GSTINs, or vehicle registrations without repeatedly developing fragile string logic. This approach is especially beneficial for professionals taking a Data Analytics Course in Hyderabad, as it enhances their capability to handle data extraction tasks efficiently.

Core Concepts: Literals, Metacharacters, and Escaping

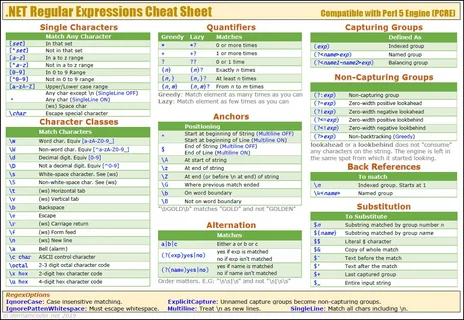

A regex is built from literal characters and metacharacters that represent sets or positions. Characters like. match any character, * means “repeat”, and [] defines character classes. Because some characters have special meaning, escaping with a backslash tells the engine to treat them literally.

In practice, start with the smallest pattern that does the job. Overly broad expressions may pass tests, but later capture junk when inputs drift. Precision and clarity beat cleverness in long-lived pipelines.

Character Classes and Quantifiers

Character classes such as [A-Z], [0-9], and \w match sets of characters, while negated classes like [^,] match anything except the comma. Quantifiers control repetition: + means one or more, * means zero or more, and {m,n} defines explicit bounds. Combining classes and quantifiers yields compact yet readable patterns.

Use lazy quantifiers like *? when you need the shortest match, such as capturing text between two markers without swallowing too much. Greedy defaults are powerful but can surprise newcomers if not constrained.

Practical Extractors for Local Formats

Consider Indian GSTINs, vehicle registrations, or IFSC codes. A concise regex can validate a form without revealing sensitive values downstream. Similarly, invoice IDs often follow predictable prefixes and numerals that are easy to extract once written carefully with anchors and quantifiers.

For addresses, capture blocks like house number, road, locality, and pin code using optional groups. Keep patterns tolerant of spacing and punctuation, but avoid making everything optional; balanced strictness yields fewer false positives.

Cleaning Noisy Logs and Chat Transcripts

Operational logs and customer chats mix timestamps, codes, and narrative text. Regex can isolate ISO-like dates, transaction IDs, or error codes, enabling joins with reference tables. Named captures save context for downstream analysis and reduce brittle string slicing.

Use non-capturing groups (?:…) when a structure is required but you do not need the value. This keeps match tuples simple and reduces memory use in large jobs.

De-identification and Masking

When handling personal data, mask or remove identifiers before sharing extracts. Regex makes it easy to replace email usernames with placeholders, redact telephone middle digits, or scrub national identifiers when present. Pair masking with logs that show counts changed, not the values themselves.

Auditable masking protects citizens and organisations while preserving analytical utility. Clear policies and tests keep the practice consistent across teams and suppliers.

Performance and Safety Considerations

Poorly bounded patterns can trigger catastrophic backtracking, where the engine tries huge numbers of combinations. Prefer explicit quantifiers and atomic constructs over nested wildcards. In critical paths, test patterns with adversarial inputs to ensure performance stays predictable under stress.

For very large files, stream line by line and avoid loading everything into memory. Compiled patterns and compiled replacement functions often produce large wins at minimal complexity.

Testing, Fixtures, and Property-Based Checks

Unit tests should cover typical cases, edge cases, and malformed inputs. Fixture strings in multiple languages and encodings help catch hidden assumptions. Property-based testing can generate random strings that still satisfy high-level rules, exposing fragility before it affects users.

A small “regex cookbook” in your repository—snippets for dates, money, IDs, and headings—accelerates work and reduces duplication. Shared assets help newcomers avoid common mistakes and encourage consistent style.

Governance, Documentation, and Review

Treat patterns as code: version them, document intent, and record examples that should match or fail. Code review should check for clarity, boundedness, and ethical handling of personal data. When patterns enforce policy—like blocking certain words—log their use and monitor for unintended bias.

Explain patterns in plain language near the code. When future readers know what the author meant to catch, updates are safer and faster.

Skills and Learning Pathways

Teams thrive when everyone shares a working knowledge of regex syntax, Unicode pitfalls, and safe replacement techniques. Short clinics on developer tools—network panels, page inspectors, and text editors with regex support—pay off quickly in productivity and quality. For structured, hands‑on progression from ad‑hoc scripts to dependable text pipelines, a data analyst course can provide curated exercises, peer review, and feedback loops that build confidence.

Learning sticks when tied to delivery. Small pilots—such as cleaning a ticket queue or standardising SMS receipts—turn abstract ideas into habits that scale across products and departments.

Local Ecosystem and Hiring

Hyderabad’s employers value portfolios that demonstrate disciplined text handling over screenshots of dashboards. Repositories with tidy tests, clear READMEs, and sensible performance notes speak louder than tool lists. For candidates seeking place-based mentoring and projects aligned to local sectors, a Data Analytics Course in Hyderabad can connect students to realistic datasets such as retail catalogues, service tickets, and civic notices.

This context builds judgment about spacing, punctuation norms, and multilingual quirks that purely generic training often misses. Local insight turns regex from a trick into a practical craft.

Conclusion

Regex gives Hyderabad’s analysts a precise, reusable way to turn messy text into structured data. With careful use of classes, anchors, groups, and flags—plus robust tests and documentation—teams can clean inputs, extract meaning, and automate routine tasks at scale. For those keen to deepen these skills with guided projects and review, a data analyst course can accelerate progress while keeping practice safe and sustainable.

ExcelR – Data Science, Data Analytics and Business Analyst Course Training in Hyderabad

Address: Cyber Towers, PHASE-2, 5th Floor, Quadrant-2, HITEC City, Hyderabad, Telangana 500081

Phone: 096321 56744